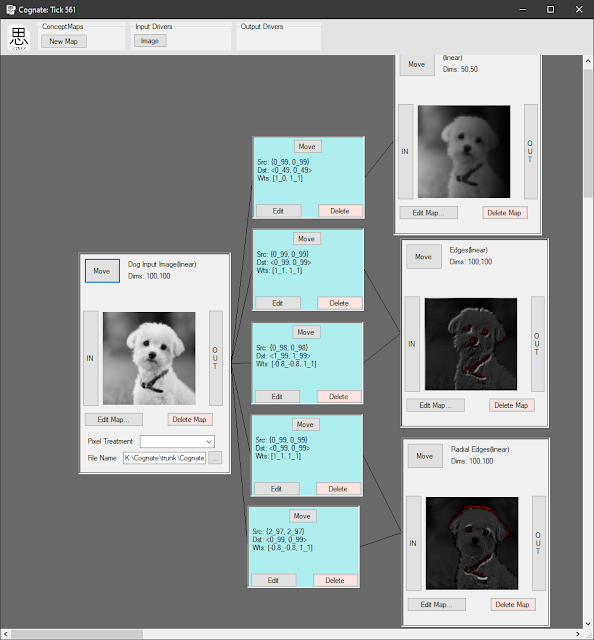

TWIT Multithreading Works!

OK, I got multithreading into TWIT C. The thread count can be any power of 2 from 1 to 256 (1, 2, 4, 8, 16, 32, 64, 128, or 256). On my machine, with 8 threads, my benchmark runs at 0.49 milliseconds per twit. The core is now done and the unit tests pass. On to Cognate, SRS, and NuTank...
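For anyone curious what the power-of-2 restriction looks like in code, here is a minimal sketch of a thread-count check. The names `TWIT_MAX_THREADS` and `is_valid_thread_count` are made up for this post and are not taken from the TWIT C source; the real check may be written differently.

```c
#include <stdbool.h>

/* Hypothetical upper bound, matching the largest value listed above. */
#define TWIT_MAX_THREADS 256

/*
 * Returns true when n is a power of 2 in the range 1..TWIT_MAX_THREADS.
 * A positive integer is a power of 2 exactly when it has a single bit set,
 * which is what (n & (n - 1)) == 0 tests.
 * Hypothetical helper for illustration; not part of the TWIT C API.
 */
bool is_valid_thread_count(int n)
{
    if (n < 1 || n > TWIT_MAX_THREADS) {
        return false;
    }
    return (n & (n - 1)) == 0;
}
```

With this sketch, `is_valid_thread_count(8)` is true, while 0, 3, and 512 are all rejected.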